EPD Development Mistakes That Delay Verification | How to Avoid Rework and Audit Risk

Environmental Product Declarations (EPDs) are increasingly required for:

Green building procurement

Public infrastructure tenders

EU taxonomy alignment

Scope 3 supplier transparency

Carbon disclosure credibility

Yet many EPD projects stall during third-party verification.

Not because of formatting issues.

Not because of minor calculation discrepancies.

But because of structural methodological weaknesses.

Below are the most common technical failures that delay verification — and how to avoid rework.

Allocation Decision Risk

The Problem

Allocation errors are among the most common causes of verification delays.

Frequent issues include:

Inconsistent allocation rules across life cycle stages

Economic allocation used without justification

Mass allocation misapplied in multi-output systems

Failure to follow PCR-specific allocation hierarchy

Lack of documented sensitivity analysis

Verification bodies require defensible reasoning aligned with:

ISO 14044

EN 15804

Applicable Product Category Rules (PCRs)

If allocation logic cannot be justified and documented, verification halts.

How to Avoid Rework

A verification-ready allocation framework must include:

Clear identification of multifunctional processes

Hierarchical decision tree (avoid allocation where possible → physical relationship → economic or other relationship)

PCR alignment cross-check matrix

Sensitivity scenario documentation

Transparent calculation log

Allocation must be treated as a governance decision — not just a modeling input.
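To illustrate the sensitivity scenario documentation listed above, here is a minimal Python sketch that compares mass and economic allocation for a two-output process. Everything in it is an assumption made for illustration: the product names, masses, prices, the 1000 kg CO2e shared burden, and the helper `allocation_shares` are hypothetical. The applicable PCR decides which basis is actually permitted.

```python
from dataclasses import dataclass


@dataclass
class CoProduct:
    name: str
    mass_kg: float       # output mass per reference period (assumed value)
    price_per_kg: float  # market price per kg (assumed value)


def allocation_shares(products, basis):
    """Return each co-product's share of the shared burden for one basis."""
    if basis == "mass":
        weights = {p.name: p.mass_kg for p in products}
    elif basis == "economic":
        # Economic allocation weights each output by its revenue.
        weights = {p.name: p.mass_kg * p.price_per_kg for p in products}
    else:
        raise ValueError(f"unknown allocation basis: {basis}")
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical multi-output process and shared burden (kg CO2e).
products = [
    CoProduct("main_product", mass_kg=800.0, price_per_kg=2.0),
    CoProduct("co_product", mass_kg=200.0, price_per_kg=0.5),
]
total_gwp = 1000.0

# One row per scenario, suitable for pasting into an allocation decision log.
for basis in ("mass", "economic"):
    shares = allocation_shares(products, basis)
    allocated = {n: round(s * total_gwp, 1) for n, s in shares.items()}
    print(basis, allocated)
```

With these assumed inputs, the main product carries 800.0 kg CO2e under mass allocation and about 941.2 kg CO2e under economic allocation. A spread of that size is the kind of result a verifier would expect to see documented alongside the justification for the chosen basis.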

PCR Misinterpretation

The Problem

PCRs are often treated as checklists rather than as binding methodological documents.

Common errors:

Incorrect declared unit selection

Wrong system boundary modules (A1–A3, A4, C, D)

Improper use of Module D credits

Missing scenario documentation

Failure to reflect updated PCR versions

Even small PCR interpretation errors can force a full model revision.

This is where projects lose weeks.

How to Avoid Rework

Before modeling begins:

Conduct structured PCR interpretation review

Map each PCR clause to the modeling requirement

Validate cut-off rules

Confirm database compatibility

Align scenario assumptions

A formal PCR scoping review prevents downstream redesign.
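The clause-to-model mapping described above could be tracked with a structure like the following. This is a sketch only: the clause identifiers, the model element names, and the `ClauseMapping` and `open_items` helpers are hypothetical and not taken from any real PCR or LCA tool.

```python
from dataclasses import dataclass


@dataclass
class ClauseMapping:
    clause: str             # PCR clause identifier (hypothetical label)
    requirement: str        # what the clause demands
    model_element: str      # where the model implements it; "" if unmapped
    confirmed: bool = False  # set once a reviewer has signed off


def open_items(mappings):
    """Return clauses that are unmapped or not yet confirmed by a reviewer."""
    return [m.clause for m in mappings if not m.model_element or not m.confirmed]


# Illustrative mapping for three requirement types named in this section.
mappings = [
    ClauseMapping("declared-unit", "Declared unit per PCR", "model.reference_flow", True),
    ClauseMapping("modules", "Module coverage A1-A3, C, D", "model.boundary_table", False),
    ClauseMapping("cut-off", "Cut-off rules", ""),
]

print(open_items(mappings))  # ['modules', 'cut-off']
```

Modeling would start only once `open_items` returns an empty list, which makes the scoping review an explicit gate rather than an informal read-through.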

Multi-Site Modeling Errors

The Problem

For manufacturers with multiple production facilities, errors commonly include:

Averaging data across sites without representativeness analysis

Ignoring regional energy mix differences

Missing transportation differentiation

Failing to justify site grouping methodology

Verification requires defensible representativeness.

Without structured documentation, grouping assumptions are rejected.

How to Avoid Rework

Multi-site governance must include:

Site representativeness matrix

Weighted production volume methodology

Regional grid factor differentiation

Data completeness scoring

Transparent justification memo

Multi-site EPDs are governance exercises — not spreadsheet consolidations.
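As a worked example of weighted production volume methodology combined with regional grid factor differentiation, the sketch below computes an electricity-related intensity for two plants. The site names, output volumes, electricity use, and grid factors are invented for illustration, and the function names are hypothetical.

```python
# Hypothetical site data: annual output (t), electricity use (kWh per t),
# and regional grid emission factor (kg CO2e per kWh).
sites = [
    {"site": "plant_a", "output_t": 6000.0, "kwh_per_t": 120.0, "grid_kg_per_kwh": 0.40},
    {"site": "plant_b", "output_t": 4000.0, "kwh_per_t": 150.0, "grid_kg_per_kwh": 0.10},
]


def site_intensity(site):
    """Electricity-related kg CO2e per tonne for one site, using its own grid."""
    return site["kwh_per_t"] * site["grid_kg_per_kwh"]


def weighted_intensity(sites):
    """Production-volume-weighted average intensity across sites."""
    total_output = sum(s["output_t"] for s in sites)
    weighted_sum = sum(site_intensity(s) * s["output_t"] for s in sites)
    return weighted_sum / total_output


def simple_average(sites):
    """Unweighted mean, shown only to contrast with the weighted result."""
    return sum(site_intensity(s) for s in sites) / len(sites)


print(round(weighted_intensity(sites), 1))  # 34.8
print(round(simple_average(sites), 1))      # 31.5
```

With these assumed figures the unweighted average understates the result by roughly 10 percent, because the larger plant sits on the more carbon-intensive grid. That gap is what a representativeness justification has to explain.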

Data Quality Gaps

The Problem

Data quality deficiencies often delay verification more than calculation errors do.

Common weaknesses:

Outdated emission factors

Mixed temporal datasets

Lack of a supplier-specific data improvement roadmap

No uncertainty classification

Missing primary data documentation

Verification bodies increasingly assess:

Temporal representativeness

Technological representativeness

Geographic alignment

Completeness

Reliability

Without a data governance structure, the verifier requests revisions.

How to Avoid Rework

Implement structured data governance:

Data source classification system

Primary vs secondary hierarchy

Temporal validation protocol

Data quality scoring table

Documentation archive with traceability

EPD verification is documentation-intensive.

If traceability fails, the EPD fails.
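A data quality scoring table and a temporal validation protocol can be as simple as the sketch below. It assumes a 1 (best) to 5 (worst) score for each of the five dimensions listed above and an unweighted mean. The scale, the aggregation, and the age limits passed to `is_stale` are illustrative assumptions; the applicable PCR and program operator rules set the real thresholds.

```python
# The five dimensions named in this section.
DIMENSIONS = ("temporal", "technological", "geographic", "completeness", "reliability")


def quality_score(scores):
    """Mean of the five dimension scores (1 = best, 5 = worst; assumed scale)."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimension scores: {missing}")
    return sum(scores[d] for d in DIMENSIONS) / len(DIMENSIONS)


def is_stale(reference_year, assessment_year, max_age_years):
    """True when a dataset is older than the allowed age (limit is an assumption)."""
    return assessment_year - reference_year > max_age_years


# Hypothetical dataset entry from a data inventory.
dataset = {
    "name": "supplier_resin",
    "reference_year": 2020,
    "scores": {"temporal": 2, "technological": 1, "geographic": 3,
               "completeness": 2, "reliability": 2},
}

print(quality_score(dataset["scores"]))                              # 2.0
print(is_stale(dataset["reference_year"], 2024, max_age_years=5))    # False
```

Keeping the score and the staleness flag next to each source entry gives the verifier one table to check instead of a trail of emails.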

The Real Cause of EPD Delays

Most EPD delays occur because development is treated as:

“Model → Calculate → Submit”

Instead of:

“Scope → Govern → Model → Document → Validate → Verify”

Verification readiness must be engineered from the beginning—not corrected at the end.

Enterprise EPD Governance Framework

A defensible EPD program requires:

Structured PCR scoping review

Allocation decision log

Data governance protocol

Multi-site modeling architecture

Pre-verification technical review

Version control documentation

Organizations that implement governance upfront reduce:

Verification cycles

External review comments

Rework costs

Publication delays

Tender submission risk

When Should You Seek Structured Advisory Support?

You should consider structured EPD advisory if:

Your product system has multiple co-products

You operate across multiple production sites

You are preparing for EU construction markets

You lack internal LCA governance expertise

You have experienced verification delays previously

EPD execution without a governance structure increases exposure to:

Tender disqualification

Publication delays

Credibility erosion

Internal resource waste

Request an EPD Scoping Review

Before investing in full modeling, many organizations benefit from a structured EPD scoping review.

This includes:

PCR interpretation validation

Allocation risk assessment

Multi-site modeling strategy

Data quality gap evaluation

Verification readiness review

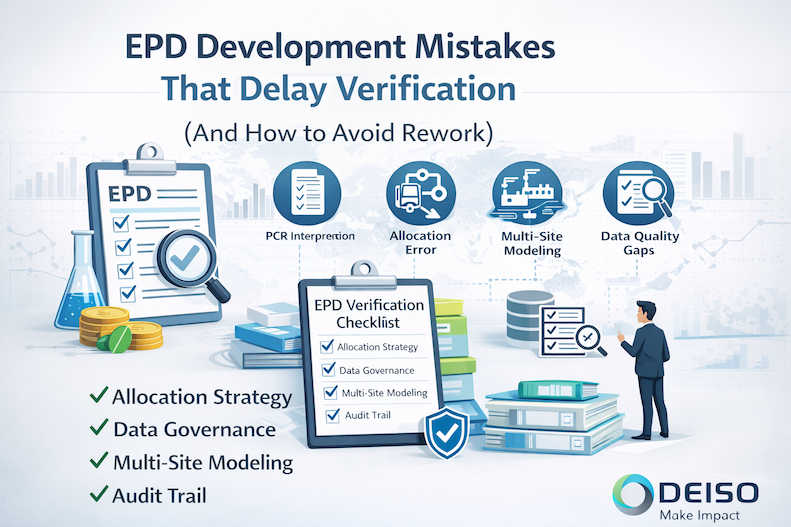

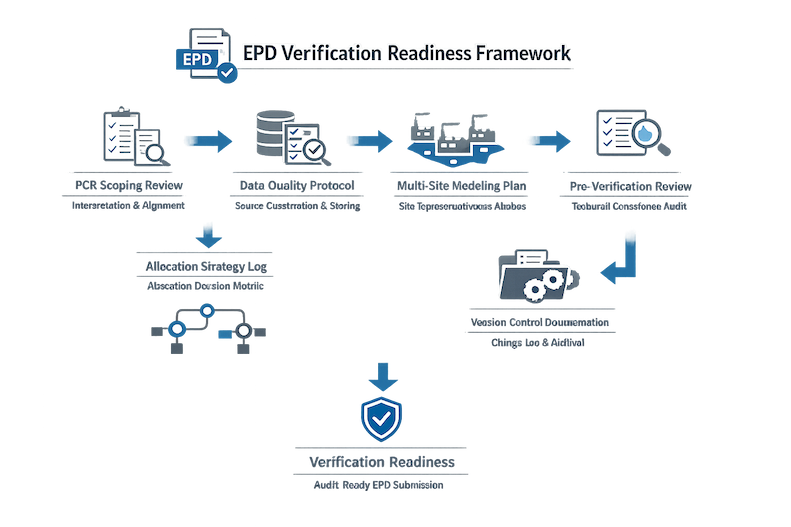

EPD Verification Readiness Framework

This infographic illustrates the structured pathway to developing verification-ready EPDs. It moves from PCR scoping and allocation governance through data quality control, multi-site modeling discipline, pre-verification review, and version-controlled documentation — culminating in an audit-ready submission.

The framework emphasizes that EPD success depends not only on modeling accuracy but also on documented decision logic, traceability, and governance architecture aligned with ISO 14044 and applicable PCR requirements.

Decision Matrix — EPD Verification Delay Risk (and What to Do)

The decision matrix highlights the most common technical and governance failures that delay EPD verification, ranking them by risk exposure and typical verifier findings. It provides a structured control response for each risk area, enabling manufacturers and consultants to proactively eliminate rework before submission.

Used correctly, this matrix supports faster verification cycles, stronger documentation defensibility, and reduced tender risk exposure.

| Risk Area | Typical Trigger | Verification Delay Risk | What Verifiers Commonly Flag | DEISO “Do This First” Control |
|---|---|---|---|---|
| PCR interpretation | PCR version mismatch; wrong declared unit; wrong module coverage (A1–A3, A4, C, D) | Very High | “PCR requirement not met”, “module/scenario documentation insufficient” | PCR scoping review + clause-to-model mapping before modeling |
| Allocation decisions | Multi-output processes; recycled content; co-products; unclear cut-off rules | Very High | “Allocation not justified”, “hierarchy not followed”, “sensitivity missing” | Allocation decision log + sensitivity cases aligned to PCR/ISO |
| Multi-site modeling | Multiple plants; averaging without representativeness; grid mix ignored | High | “Site representativeness not justified”, “weighted averaging unclear” | Site representativeness matrix + weighting method (volume, technology, geography) |
| Primary data completeness | Missing measured energy/materials; weak metering; supplier data gaps | High | “Primary data insufficient”, “data sources not traceable” | Data inventory + traceability pack (sources, timestamps, units, QA notes) |
| Secondary data / EF control | Mixed databases; outdated emission factors; inconsistent versions | Medium–High | “Database version inconsistency”, “EF choice not justified” | Emission factor register + version control (change log, rationale) |
| Scenario documentation | Transport/end-of-life assumptions not documented; Module D credits unclear | Medium–High | “Scenario assumptions not transparent”, “Module D not defensible” | Scenario set + documentation annex (assumption table + references) |
| Model reproducibility | No calculation trail; no change log; unclear unit conversions | High | “Cannot reproduce results”, “insufficient audit trail” | Model audit trail pack (inputs, calculations, unit checks, reviewer notes) |
| Internal QA before verifier | No pre-check; rushed submission | High | Numerous corrective actions; iterative rounds | Pre-verification technical review (structured checklist + evidence bundle) |
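The "Emission factor register + version control" control in the matrix can be checked mechanically. The sketch below flags any database that appears in the register under more than one version. The register entries, the database name `example_db`, and the version strings are hypothetical.

```python
from collections import defaultdict

# Hypothetical emission factor register: one entry per background dataset used.
register = [
    {"flow": "electricity", "database": "example_db", "version": "3.9"},
    {"flow": "steel", "database": "example_db", "version": "3.9"},
    {"flow": "cement", "database": "example_db", "version": "3.8"},
]


def versions_by_database(register):
    """Collect the distinct versions recorded for each database."""
    found = defaultdict(set)
    for entry in register:
        found[entry["database"]].add(entry["version"])
    return {db: sorted(versions) for db, versions in found.items()}


def inconsistent_databases(register):
    """Return databases that are referenced under more than one version."""
    return {db: v for db, v in versions_by_database(register).items() if len(v) > 1}


print(inconsistent_databases(register))  # {'example_db': ['3.8', '3.9']}
```

A non-empty result does not always mean an error, but each mixed-version case needs a documented rationale in the change log before submission.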

EPD Pre-Submission Checklist (Before Sending to Verifier)

This checklist provides a structured, verification-focused control framework to review an Environmental Product Declaration before submission. It consolidates critical technical checkpoints — PCR alignment, allocation governance, data quality validation, multi-site representativeness, scenario documentation, and audit traceability — into a single defensible review layer.

Used before engaging the verifier, this checklist reduces corrective action cycles, prevents avoidable rework, and strengthens the methodological credibility of the EPD submission.

| Checklist Area | What “Done” Looks Like | Evidence to Attach | Common Verifier Pushback if Missing |
|---|---|---|---|
| PCR selection & version | Correct PCR selected; version/date recorded; program operator rules confirmed | PCR PDF link/version note; PO guidance reference | Wrong PCR/version → rework required |
| Declared unit & product definition | Declared unit matches PCR; product variants and scope are unambiguous | Product spec sheet; declared unit rationale | Declared unit inconsistent |
| System boundary modules | Modules (A1–A3, A4, A5, B, C, D) correctly included/excluded per PCR | Boundary diagram; module inclusion table | Missing modules / wrong exclusions |
| Cut-off rules | Cut-offs applied exactly per PCR; exclusions documented and justified | Cut-off memo; excluded flows list | Cut-offs not compliant / undocumented |
| Allocation decision log | Multifunctionality identified; allocation hierarchy followed; sensitivities done | Allocation log + sensitivity results | Allocation not justified |
| Data inventory completeness | Primary data coverage adequate; data gaps identified; substitutions justified | Data inventory sheet; data gap register | Primary data insufficient |
| Data quality assessment | DQI/quality scoring completed (temporal/geographic/tech/completeness) | DQI table; source list with dates | Data quality not demonstrated |
| Database & EF version control | Database name/version fixed; EF sources consistent; change log maintained | Database version screenshot; EF register | Inconsistent datasets / versions |
| Multi-site modeling governance | Site grouping/weighting method defined; representativeness justified | Site matrix; weighting calculation | Averaging not defensible |
| Energy & electricity modeling | Electricity mix correct by site/year; approach documented where relevant | Grid factor sources; site electricity bills | Wrong electricity factors |
| Transport scenarios | Transport distances/modes documented; scenarios align with PCR | Transport assumptions table | Transport scenario not evidenced |
| End-of-life & Module D | EoL scenarios transparent; Module D method compliant; avoided burden justified | EoL scenario table; Module D rationale | Module D credits not defensible |
| LCIA method compliance | LCIA method matches PCR/EN 15804 requirements; factors aligned | LCIA method statement; tool settings | Wrong impact method / factors |
| Units & conversions QA | Unit consistency verified; conversion factors documented; no hidden assumptions | Unit check sheet; conversion notes | Calculation not reproducible |
| Model reproducibility pack | Independent reviewer can reproduce totals from inputs | Exported model; parameter list | No audit trail / cannot reproduce |
| Draft EPD narrative consistency | EPD text matches model (boundaries, scenarios, data periods, sites) | Cross-check notes; redline review | Narrative conflicts with model |
| Pre-verification internal review | Structured internal review completed; corrective actions logged | Review checklist; action log | Too many avoidable NCRs |
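One way to run the checklist above is to compare the evidence each area requires against what has been attached so far. The sketch below covers only three areas, with evidence labels paraphrased from the table; the `REQUIRED_EVIDENCE` structure and the `missing_evidence` helper are hypothetical.

```python
# Required evidence per checklist area (a small subset, paraphrased from the table).
REQUIRED_EVIDENCE = {
    "PCR selection & version": {"PCR version note", "PO guidance reference"},
    "Allocation decision log": {"Allocation log", "Sensitivity results"},
    "Multi-site modeling governance": {"Site matrix", "Weighting calculation"},
}


def missing_evidence(attached):
    """Return, per checklist area, the evidence items not yet attached."""
    gaps = {}
    for area, required in REQUIRED_EVIDENCE.items():
        missing = sorted(required - attached.get(area, set()))
        if missing:
            gaps[area] = missing
    return gaps


# Hypothetical state of a submission bundle.
attached = {
    "PCR selection & version": {"PCR version note", "PO guidance reference"},
    "Allocation decision log": {"Allocation log"},
}

for area, items in missing_evidence(attached).items():
    print(f"{area}: missing {', '.join(items)}")
```

For this assumed bundle the check reports the missing sensitivity results and both multi-site items, so the package would not yet go to the verifier.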

To request a structured EPD Scoping Review:

https://dei.so/contact-form

For targeted technical guidance, hourly advisory is also available:

https://dei.so/life-cycle-assessment-lca-per-hours-consultation-service
